Health

Overview

The Health section in BotStudio helps you monitor the chatbot’s performance, detect errors, and find possible improvements. Reviewing it regularly helps you catch problems early and keep user interactions consistent.

The Health section is located under Analyze & Debug in the main navigation in BotStudio.

Health Features

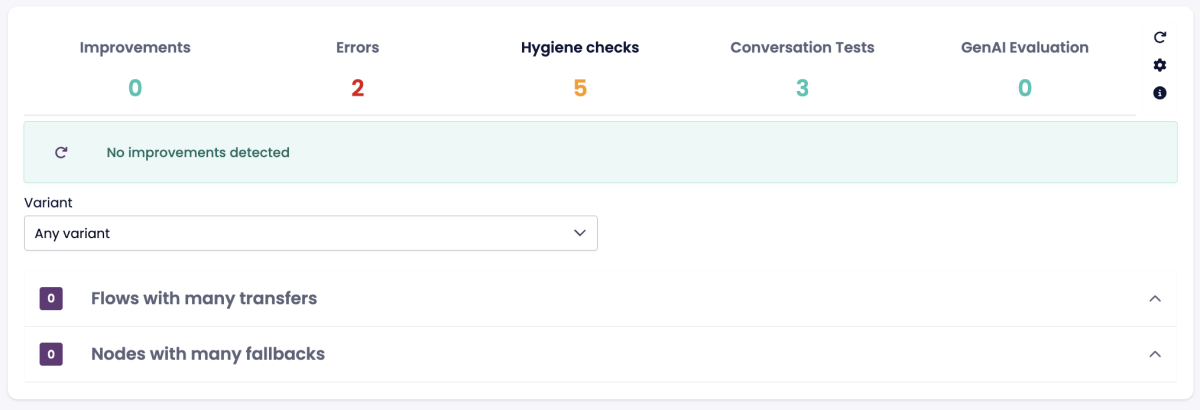

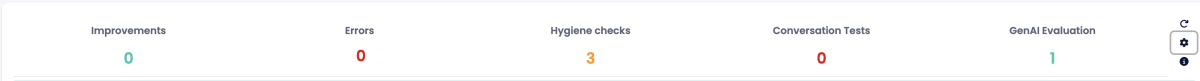

The Health section provides five key types of checks:

- Improvements – Recommendations for enhancing chatbot performance.

- Errors – Identifies and highlights potential issues that could affect chatbot functionality.

- Hygiene Checks – Ensures chatbot flows and configurations are optimized.

- Conversation Tests – Validates chatbot responses to ensure consistency.

- GenAI Evaluation – Evaluates AI-generated responses to fine-tune accuracy.

Health Page Controls

At the top right of the Health page, you will find three key buttons:

- Recompute Checks – Refreshes all health assessments.

- Edit Health Settings – Adjusts thresholds for various checks (e.g., number of draft responses allowed).

- View Health Descriptions – Provides details on what each health check entails.

If the chatbot has multiple variants or supports different languages, a selection menu allows switching between them for targeted analysis.

Diagnostics

The Diagnostics bar displays system-wide issues affecting the chatbot. Depending on the selected tab, this bar provides an overview of key findings.

- Green Bar: No errors detected.

- Red Bar: Errors need attention, such as syntax issues or misconfigured authentication.

Common Issues Detected:

- Identically named nodes.

- BotScript syntax errors.

- Nodes requiring authentication when global authentication is disabled.

- Missing fallback for authentication failures.

- Compilation errors preventing chatbot deployment.
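To make two of these checks concrete, here is a minimal Python sketch of how identically named nodes and authentication conflicts could be detected. It is only an illustration: the node dictionaries, the `name` and `requires_auth` fields, and both function names are assumptions made for this example, not BotStudio's actual data model or API.

```python
from collections import Counter


def find_duplicate_node_names(nodes):
    """Return node names that appear more than once, sorted alphabetically."""
    counts = Counter(node["name"] for node in nodes)
    return sorted(name for name, count in counts.items() if count > 1)


def find_auth_conflicts(nodes, global_auth_enabled):
    """Return names of nodes that require authentication while global
    authentication is disabled. With global authentication on, nothing conflicts."""
    if global_auth_enabled:
        return []
    return [node["name"] for node in nodes if node.get("requires_auth", False)]


# Hypothetical flow export; the field names are assumptions for this sketch.
nodes = [
    {"name": "Greeting", "requires_auth": False},
    {"name": "Order status", "requires_auth": True},
    {"name": "Greeting", "requires_auth": False},
]

print(find_duplicate_node_names(nodes))
print(find_auth_conflicts(nodes, global_auth_enabled=False))
```

In this sample, "Greeting" is reported as a duplicate name and "Order status" as an authentication conflict.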


Improvements

The Improvements tab highlights areas for chatbot optimization. These include:

- Flows with Many Transfers – Identifies frequent chatbot-to-agent handovers and suggests improvements.

- Nodes with Many Fallbacks – Detects nodes where the chatbot frequently fails to understand user input, suggesting enhancements to matching rules and intent classification.

- Explore Feature – Groups common words into topics, providing insight into potential new chatbot flows.
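As a rough illustration of the idea behind Nodes with Many Fallbacks, the sketch below computes a fallback rate per node from a list of visit events and flags nodes above a cutoff. The event format, the function name, and the `min_rate` and `min_visits` defaults are all assumptions chosen for the example; BotStudio's own calculation and thresholds may differ.

```python
from collections import defaultdict


def nodes_with_many_fallbacks(events, min_rate=0.3, min_visits=5):
    """Return {node: fallback_rate} for nodes whose fallback rate is at
    least min_rate, ignoring nodes with fewer than min_visits visits.

    events is an iterable of (node_name, fell_back) pairs.
    """
    visits = defaultdict(int)
    fallbacks = defaultdict(int)
    for node, fell_back in events:
        visits[node] += 1
        if fell_back:
            fallbacks[node] += 1

    flagged = {}
    for node, total in visits.items():
        rate = fallbacks[node] / total
        if total >= min_visits and rate >= min_rate:
            flagged[node] = rate
    return flagged


# Hypothetical sample: "Billing" falls back on 4 of 10 visits, "Greeting" never does.
events = (
    [("Billing", True)] * 4
    + [("Billing", False)] * 6
    + [("Greeting", False)] * 10
)

flagged = nodes_with_many_fallbacks(events)
print(flagged)
```

The `min_visits` guard keeps rarely visited nodes from being flagged on the basis of one or two failed messages.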


Errors

The Errors tab lists critical issues that can affect chatbot performance. These include:

- Syntax errors preventing deployment.

- Misconfigured nodes.

- Authentication-related failures.

Errors are categorized based on their severity. Some may prevent the chatbot from functioning, while others may cause minor inconsistencies.


Hygiene Checks

Hygiene checks provide recommendations for keeping the chatbot clean and efficient. These include:

- Ensuring all classifiers have been trained recently.

- Checking for redundant or outdated nodes.

- Identifying broken links within chatbot flows.

Checks that are not relevant to the chatbot can be disabled.
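The broken-link check can be pictured as a simple graph lookup: a link is broken when it points to a node that no longer exists. The sketch below shows that idea on a flow represented as a dictionary from node name to the names it links to. This representation and the function name are assumptions for illustration only, not BotStudio's internal format.

```python
def find_broken_links(flow):
    """Return (source, target) pairs where target is not a node in the flow."""
    broken = []
    for source, targets in flow.items():
        for target in targets:
            if target not in flow:
                broken.append((source, target))
    return broken


# Hypothetical flow: "Menu" still links to "Support", which has been deleted.
flow = {
    "Greeting": ["Menu"],
    "Menu": ["Billing", "Support"],
    "Billing": [],
}

print(find_broken_links(flow))
```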


Conversation Tests

Conversation tests allow chatbot administrators to validate the chatbot’s responses using pre-recorded interactions. These tests help ensure that chatbot behavior remains consistent after updates.

Two Types of Tests:

- Test Path – Verifies that the chatbot follows the same node sequence in repeated interactions.

- Test Content – Ensures chatbot responses remain identical when given the same input.
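The difference between the two test types can be sketched in Python as follows. A recorded conversation is compared turn by turn with a replayed one: the path test compares node names, and the content test compares reply text. The `Turn` class, its fields, and `run_conversation_test` are hypothetical names introduced for this example; they do not describe how BotStudio stores or runs tests.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    user_input: str  # what the user typed
    node: str        # node that handled the message
    reply: str       # text the chatbot sent back


def run_conversation_test(recorded, replayed, test_path=True, test_content=True):
    """Compare two conversations and return a list of failure messages.
    An empty list means the test passed."""
    failures = []
    if len(recorded) != len(replayed):
        failures.append(f"turn count changed: {len(recorded)} -> {len(replayed)}")
    for i, (old, new) in enumerate(zip(recorded, replayed), start=1):
        if test_path and old.node != new.node:
            failures.append(f"turn {i}: path changed ({old.node} -> {new.node})")
        if test_content and old.reply != new.reply:
            failures.append(f"turn {i}: content changed")
    return failures


recorded = [
    Turn("hi", "Greeting", "Hello!"),
    Turn("where is my order", "Order status", "Let me check."),
]
# After an update, the second reply is reworded but the same node handles it.
replayed = [
    Turn("hi", "Greeting", "Hello!"),
    Turn("where is my order", "Order status", "Let me look that up."),
]

print(run_conversation_test(recorded, replayed, test_content=False))
print(run_conversation_test(recorded, replayed))
```

In this sample, the path-only run passes because the node sequence is unchanged, while the run that also tests content reports the reworded second reply. This is why a content test is stricter than a path test.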

Running a Conversation Test:

- Engage in a test conversation with the chatbot.

- Verify that responses are as expected.

- Click Use This Conversation as a Test Case.

- Assign a test name and specify whether to test the path, content, or both.

- Save the test, then rerun it after each chatbot update.


Updating Conversation Tests

If chatbot responses change over time, existing tests may need updates. If a test fails, administrators can either:

- Adjust chatbot responses to align with the expected outcome.

- Update the test case to reflect the chatbot’s latest response behavior.


GenAI Evaluation

The GenAI Evaluation feature uses AI models to assess chatbot-generated responses. Use it to refine chatbot accuracy and improve response quality.

Key Features:

- Generated Reply Quality – Evaluates response correctness based on set thresholds.

- Articles Presented – Analyzes the quality of suggested content based on user queries.

- Threshold Adjustments – Fine-tunes AI model performance for optimal results.

Running a GenAI Evaluation:

- Activate the Generative AI API in the demo panel.

- Enter a test message and add it to Generative AI Tests.

- View evaluation results on the GenAI Evaluation page.


Adjusting Thresholds

Thresholds determine whether chatbot responses are classified as:

- Good – High confidence and accurate response.

- Acceptable – Somewhat relevant but could be improved.

- Unacceptable – Poor response requiring adjustment.

- No Reply Sent – Below threshold and not displayed to the user.

Administrators can experiment with threshold values to optimize chatbot performance.
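One way to picture how thresholds map to these four classes is the sketch below. It assumes a single score between 0 and 1 and three ascending cutoffs; the score scale, the parameter names, the default values, and the rule that a sent reply below the acceptable cutoff counts as Unacceptable are all assumptions made for illustration. The actual scoring in BotStudio may work differently.

```python
def classify_reply(score, send_threshold=0.3, acceptable_threshold=0.5,
                   good_threshold=0.8):
    """Map a score in [0, 1] to one of the four classes.
    All threshold defaults are illustrative, not product defaults."""
    if not send_threshold <= acceptable_threshold <= good_threshold:
        raise ValueError("thresholds must be in ascending order")
    if score < send_threshold:
        return "No Reply Sent"   # too weak to show to the user
    if score < acceptable_threshold:
        return "Unacceptable"    # sent, but poor
    if score < good_threshold:
        return "Acceptable"      # relevant, could be improved
    return "Good"


for score in (0.1, 0.4, 0.6, 0.9):
    print(score, classify_reply(score))
```

Raising `send_threshold` in this sketch moves borderline replies from Unacceptable to No Reply Sent, which illustrates the trade-off between answering more questions and answering them well.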


Best Practices for Maintaining Chatbot Health

- Regularly recompute health checks to catch new issues early.

- Use conversation tests to validate chatbot performance after updates.

- Monitor the Errors tab and the Diagnostics bar to catch problems before they cause disruptions.

- Fine-tune AI thresholds to balance accuracy and relevance.

- Review hygiene checks periodically to maintain an optimized chatbot structure.